CreativeExplorer-MichaelMandaville.com

I work in Film. I live in Martial Arts. I thrive in Imagination.

Filmmaking & Writing

Scholar Warrior Way

Current Events

Grok on Redistricting

I found this website which seems neutral on its explanations called Redistrictingdatahub.com

What is redistricting?

Redistricting is the process of redrawing electoral boundaries. It occurs for all levels of representative government, including the US House of Representatives, state legislatures, county and city government, schools districts, and more. These boundaries define the constituency that will be represented in government and the district in which a candidate for office runs.

I actually asked Grok about how to create a neutral unbiased Redistricting map. Here's the explanation:

To achieve truly random redistricting across all 50 U.S. states every two years (e.g., aligned with federal election cycles or census updates), you can leverage geographic information systems (GIS), computational geometry, and randomization algorithms. The core idea is to overlay a fine-grained grid on each state's geometry, then use a cryptographically secure random process to aggregate grid cells into districts of equal population. This ensures compactness, contiguity, and population equality while eliminating human bias.Below is a step-by-step technical framework using open-source tools (like Python with libraries such as GeoPandas, Shapely, and NumPy) that could be scaled nationally via cloud computing. This can be automated to run biennially with updated Census data.1. Data Preparation (One-Time Setup per Cycle)

Obtain State Boundaries and Population Data:

Download state shapefiles from the U.S. Census Bureau TIGER/Line database (free, updated post-decennial census or annually for estimates).

Use block-level population data (smallest unit, ~100 people per block) from the Census API or PL 94-171 redistricting files.

Preprocess:

For each state, clip population blocks to the state boundary.

Project everything to a equal-area CRS (e.g., Albers Equal Area Conic for the U.S.: EPSG:5070) to preserve area/population accuracy.

2. Overlay a Randomized Grid

Grid Generation:

Define a fine grid resolution based on state size and desired district count. For example:

Grid cell size: 500m × 500m (adjustable; finer for precision, coarser for speed).

Use GeoPandas to create a fishnet grid over the state's bounding box.

Randomize the Grid Origin (to prevent fixed patterns):

Generate a cryptographically secure random offset (x, y) within one cell's dimensions using secrets module or /dev/urandom.

Shift the entire grid by this offset. This ensures the grid doesn't align predictably with geography or demographics.

Assign Population to Grid Cells:

Spatially join Census blocks to grid cells.

Apportion block population to intersecting cells proportionally by area (using Shapely's intersection and area).

Python Snippet Example (for one state; loop over all 50):

python

import geopandas as gpd

import numpy as np

from shapely.geometry import Polygon

import secrets

# Load state boundary and blocksstate = gpd.read_file('tl_2020_us_state.shp').query("NAME == 'California'").to_crs(epsg=5070)blocks = gpd.read_file('tl_2020_06_tabblock20.shp').to_crs(epsg=5070)# CA example

# Random grid offset (0 to cell_size)cell_size =500# metersrand_x = secrets.randbelow(cell_size)rand_y = secrets.randbelow(cell_size)

# Create shifted gridxmin, ymin, xmax, ymax = state.total_bounds

x_coords = np.arange(xmin + rand_x, xmax, cell_size)y_coords = np.arange(ymin + rand_y, ymax, cell_size)grid_cells =[Polygon([(x,y),(x+cell_size,y),(x+cell_size,y+cell_size),(x,y+cell_size)])for x in x_coords for y in y_coords]grid = gpd.GeoDataFrame(geometry=grid_cells, crs=state.crs)grid = grid[grid.intersects(state.unary_union)]# Clip to state

# Assign population (simplified)grid['population']=0for idx, block in blocks.iterrows(): intersecting = grid[grid.intersects(block.geometry)]for g_idx, cell in intersecting.iterrows(): inter_area = cell.geometry.intersection(block.geometry).area

prop = inter_area / block.geometry.area

grid.at[g_idx,'population']+= block['POP20']* prop3. Random District Formation

Determine District Count and Target Population:

Districts per state: Based on congressional seats (from latest Census apportionment) + state legislative districts if needed.

Target pop per district: Total state pop / districts (allow ±1% deviation for integers).

Aggregation Algorithm (Random Seed-and-Grow):

Use a randomized flood-fill or hierarchical clustering to group contiguous grid cells.

Steps:

Randomly select seed cells (one per district) using weighted random sampling by population (higher-pop cells more likely as seeds).

Grow districts iteratively: Assign unassigned contiguous cells to the nearest district (by centroid distance) until population targets are met.

Enforce contiguity with union-find or BFS/DFS on grid adjacency graph.

If population imbalance >1%, perturb by swapping border cells randomly and recheck.

For true randomness: Seed the RNG with a public, verifiable source (e.g., NIST beacon or blockchain hash from the cycle's start date).

Alternative Algorithms for Variety:

Voronoi Partitioning: Generate random points (Poisson disk sampling for even spread), compute Voronoi diagram clipped to state, adjust points iteratively for pop equality.

Genetic Algorithm: Evolve random partitions, scoring on compactness (Polsby-Popper) and pop equality; select fittest.

4. Automation and Scaling for All States Every 2 Years

Pipeline Orchestration:

Use AWS/GCP batch jobs or Apache Airflow to run per-state in parallel.

Trigger biennially: Cron job pulls latest Census estimates (API: api.census.gov), runs on Feb 1 of even years post-elections.

Output: GeoJSON/Shapefiles of districts, uploaded to a public portal (e.g., GitHub or state election site).

Verification and Transparency:

Publish RNG seed (e.g., hash of prior election results) for reproducibility.

Run 100+ simulations per state; select one randomly or by neutrality metric (e.g., minimal efficiency gap).

Open-source the code (e.g., on GitHub under MIT license) for audits.

5. Challenges and Mitigations

Population Equality: Use optimization (e.g., PuLP linear programming) to fine-tune after random aggregation.

Compactness/Contiguity: Post-process with smoothing (e.g., convex hull) or reject non-compact runs.

VRA Compliance: If required, add constraints (e.g., preserve majority-minority areas via seeded protection); otherwise, pure randomness may violate—hybrid with human review.

Compute: ~1-2 hours per large state on a modern CPU; parallelize across 50 states in <1 day.

Legality: This would require constitutional amendments or state laws; tech alone can't enforce.

This system produces verifiable, bias-free maps. Tools like Dave's Redistricting App or Districtr already prototype similar ideas—extend them nationally with the above randomization. For a proof-of-concept, start with one state in Jupyter Notebook.

Grok on Redistricting

I found this website which seems neutral on its explanations called Redistrictingdatahub.com

What is redistricting?

Redistricting is the process of redrawing electoral boundaries. It occurs for all levels of representative government, including the US House of Representatives, state legislatures, county and city government, schools districts, and more. These boundaries define the constituency that will be represented in government and the district in which a candidate for office runs.

I actually asked Grok about how to create a neutral unbiased Redistricting map. Here's the explanation:

To achieve truly random redistricting across all 50 U.S. states every two years (e.g., aligned with federal election cycles or census updates), you can leverage geographic information systems (GIS), computational geometry, and randomization algorithms. The core idea is to overlay a fine-grained grid on each state's geometry, then use a cryptographically secure random process to aggregate grid cells into districts of equal population. This ensures compactness, contiguity, and population equality while eliminating human bias.Below is a step-by-step technical framework using open-source tools (like Python with libraries such as GeoPandas, Shapely, and NumPy) that could be scaled nationally via cloud computing. This can be automated to run biennially with updated Census data.1. Data Preparation (One-Time Setup per Cycle)

Obtain State Boundaries and Population Data:

Download state shapefiles from the U.S. Census Bureau TIGER/Line database (free, updated post-decennial census or annually for estimates).

Use block-level population data (smallest unit, ~100 people per block) from the Census API or PL 94-171 redistricting files.

Preprocess:

For each state, clip population blocks to the state boundary.

Project everything to a equal-area CRS (e.g., Albers Equal Area Conic for the U.S.: EPSG:5070) to preserve area/population accuracy.

2. Overlay a Randomized Grid

Grid Generation:

Define a fine grid resolution based on state size and desired district count. For example:

Grid cell size: 500m × 500m (adjustable; finer for precision, coarser for speed).

Use GeoPandas to create a fishnet grid over the state's bounding box.

Randomize the Grid Origin (to prevent fixed patterns):

Generate a cryptographically secure random offset (x, y) within one cell's dimensions using secrets module or /dev/urandom.

Shift the entire grid by this offset. This ensures the grid doesn't align predictably with geography or demographics.

Assign Population to Grid Cells:

Spatially join Census blocks to grid cells.

Apportion block population to intersecting cells proportionally by area (using Shapely's intersection and area).

Python Snippet Example (for one state; loop over all 50):

python

import geopandas as gpd

import numpy as np

from shapely.geometry import Polygon

import secrets

# Load state boundary and blocksstate = gpd.read_file('tl_2020_us_state.shp').query("NAME == 'California'").to_crs(epsg=5070)blocks = gpd.read_file('tl_2020_06_tabblock20.shp').to_crs(epsg=5070)# CA example

# Random grid offset (0 to cell_size)cell_size =500# metersrand_x = secrets.randbelow(cell_size)rand_y = secrets.randbelow(cell_size)

# Create shifted gridxmin, ymin, xmax, ymax = state.total_bounds

x_coords = np.arange(xmin + rand_x, xmax, cell_size)y_coords = np.arange(ymin + rand_y, ymax, cell_size)grid_cells =[Polygon([(x,y),(x+cell_size,y),(x+cell_size,y+cell_size),(x,y+cell_size)])for x in x_coords for y in y_coords]grid = gpd.GeoDataFrame(geometry=grid_cells, crs=state.crs)grid = grid[grid.intersects(state.unary_union)]# Clip to state

# Assign population (simplified)grid['population']=0for idx, block in blocks.iterrows(): intersecting = grid[grid.intersects(block.geometry)]for g_idx, cell in intersecting.iterrows(): inter_area = cell.geometry.intersection(block.geometry).area

prop = inter_area / block.geometry.area

grid.at[g_idx,'population']+= block['POP20']* prop3. Random District Formation

Determine District Count and Target Population:

Districts per state: Based on congressional seats (from latest Census apportionment) + state legislative districts if needed.

Target pop per district: Total state pop / districts (allow ±1% deviation for integers).

Aggregation Algorithm (Random Seed-and-Grow):

Use a randomized flood-fill or hierarchical clustering to group contiguous grid cells.

Steps:

Randomly select seed cells (one per district) using weighted random sampling by population (higher-pop cells more likely as seeds).

Grow districts iteratively: Assign unassigned contiguous cells to the nearest district (by centroid distance) until population targets are met.

Enforce contiguity with union-find or BFS/DFS on grid adjacency graph.

If population imbalance >1%, perturb by swapping border cells randomly and recheck.

For true randomness: Seed the RNG with a public, verifiable source (e.g., NIST beacon or blockchain hash from the cycle's start date).

Alternative Algorithms for Variety:

Voronoi Partitioning: Generate random points (Poisson disk sampling for even spread), compute Voronoi diagram clipped to state, adjust points iteratively for pop equality.

Genetic Algorithm: Evolve random partitions, scoring on compactness (Polsby-Popper) and pop equality; select fittest.

4. Automation and Scaling for All States Every 2 Years

Pipeline Orchestration:

Use AWS/GCP batch jobs or Apache Airflow to run per-state in parallel.

Trigger biennially: Cron job pulls latest Census estimates (API: api.census.gov), runs on Feb 1 of even years post-elections.

Output: GeoJSON/Shapefiles of districts, uploaded to a public portal (e.g., GitHub or state election site).

Verification and Transparency:

Publish RNG seed (e.g., hash of prior election results) for reproducibility.

Run 100+ simulations per state; select one randomly or by neutrality metric (e.g., minimal efficiency gap).

Open-source the code (e.g., on GitHub under MIT license) for audits.

5. Challenges and Mitigations

Population Equality: Use optimization (e.g., PuLP linear programming) to fine-tune after random aggregation.

Compactness/Contiguity: Post-process with smoothing (e.g., convex hull) or reject non-compact runs.

VRA Compliance: If required, add constraints (e.g., preserve majority-minority areas via seeded protection); otherwise, pure randomness may violate—hybrid with human review.

Compute: ~1-2 hours per large state on a modern CPU; parallelize across 50 states in <1 day.

Legality: This would require constitutional amendments or state laws; tech alone can't enforce.

This system produces verifiable, bias-free maps. Tools like Dave's Redistricting App or Districtr already prototype similar ideas—extend them nationally with the above randomization. For a proof-of-concept, start with one state in Jupyter Notebook.

Grok on Redistricting

I found this website which seems neutral on its explanations called Redistrictingdatahub.com

What is redistricting?

Redistricting is the process of redrawing electoral boundaries. It occurs for all levels of representative government, including the US House of Representatives, state legislatures, county and city government, schools districts, and more. These boundaries define the constituency that will be represented in government and the district in which a candidate for office runs.

I actually asked Grok about how to create a neutral unbiased Redistricting map. Here's the explanation:

To achieve truly random redistricting across all 50 U.S. states every two years (e.g., aligned with federal election cycles or census updates), you can leverage geographic information systems (GIS), computational geometry, and randomization algorithms. The core idea is to overlay a fine-grained grid on each state's geometry, then use a cryptographically secure random process to aggregate grid cells into districts of equal population. This ensures compactness, contiguity, and population equality while eliminating human bias.Below is a step-by-step technical framework using open-source tools (like Python with libraries such as GeoPandas, Shapely, and NumPy) that could be scaled nationally via cloud computing. This can be automated to run biennially with updated Census data.1. Data Preparation (One-Time Setup per Cycle)

Obtain State Boundaries and Population Data:

Download state shapefiles from the U.S. Census Bureau TIGER/Line database (free, updated post-decennial census or annually for estimates).

Use block-level population data (smallest unit, ~100 people per block) from the Census API or PL 94-171 redistricting files.

Preprocess:

For each state, clip population blocks to the state boundary.

Project everything to a equal-area CRS (e.g., Albers Equal Area Conic for the U.S.: EPSG:5070) to preserve area/population accuracy.

2. Overlay a Randomized Grid

Grid Generation:

Define a fine grid resolution based on state size and desired district count. For example:

Grid cell size: 500m × 500m (adjustable; finer for precision, coarser for speed).

Use GeoPandas to create a fishnet grid over the state's bounding box.

Randomize the Grid Origin (to prevent fixed patterns):

Generate a cryptographically secure random offset (x, y) within one cell's dimensions using secrets module or /dev/urandom.

Shift the entire grid by this offset. This ensures the grid doesn't align predictably with geography or demographics.

Assign Population to Grid Cells:

Spatially join Census blocks to grid cells.

Apportion block population to intersecting cells proportionally by area (using Shapely's intersection and area).

Python Snippet Example (for one state; loop over all 50):

python

import geopandas as gpd

import numpy as np

from shapely.geometry import Polygon

import secrets

# Load state boundary and blocksstate = gpd.read_file('tl_2020_us_state.shp').query("NAME == 'California'").to_crs(epsg=5070)blocks = gpd.read_file('tl_2020_06_tabblock20.shp').to_crs(epsg=5070)# CA example

# Random grid offset (0 to cell_size)cell_size =500# metersrand_x = secrets.randbelow(cell_size)rand_y = secrets.randbelow(cell_size)

# Create shifted gridxmin, ymin, xmax, ymax = state.total_bounds

x_coords = np.arange(xmin + rand_x, xmax, cell_size)y_coords = np.arange(ymin + rand_y, ymax, cell_size)grid_cells =[Polygon([(x,y),(x+cell_size,y),(x+cell_size,y+cell_size),(x,y+cell_size)])for x in x_coords for y in y_coords]grid = gpd.GeoDataFrame(geometry=grid_cells, crs=state.crs)grid = grid[grid.intersects(state.unary_union)]# Clip to state

# Assign population (simplified)grid['population']=0for idx, block in blocks.iterrows(): intersecting = grid[grid.intersects(block.geometry)]for g_idx, cell in intersecting.iterrows(): inter_area = cell.geometry.intersection(block.geometry).area

prop = inter_area / block.geometry.area

grid.at[g_idx,'population']+= block['POP20']* prop3. Random District Formation

Determine District Count and Target Population:

Districts per state: Based on congressional seats (from latest Census apportionment) + state legislative districts if needed.

Target pop per district: Total state pop / districts (allow ±1% deviation for integers).

Aggregation Algorithm (Random Seed-and-Grow):

Use a randomized flood-fill or hierarchical clustering to group contiguous grid cells.

Steps:

Randomly select seed cells (one per district) using weighted random sampling by population (higher-pop cells more likely as seeds).

Grow districts iteratively: Assign unassigned contiguous cells to the nearest district (by centroid distance) until population targets are met.

Enforce contiguity with union-find or BFS/DFS on grid adjacency graph.

If population imbalance >1%, perturb by swapping border cells randomly and recheck.

For true randomness: Seed the RNG with a public, verifiable source (e.g., NIST beacon or blockchain hash from the cycle's start date).

Alternative Algorithms for Variety:

Voronoi Partitioning: Generate random points (Poisson disk sampling for even spread), compute Voronoi diagram clipped to state, adjust points iteratively for pop equality.

Genetic Algorithm: Evolve random partitions, scoring on compactness (Polsby-Popper) and pop equality; select fittest.

4. Automation and Scaling for All States Every 2 Years

Pipeline Orchestration:

Use AWS/GCP batch jobs or Apache Airflow to run per-state in parallel.

Trigger biennially: Cron job pulls latest Census estimates (API: api.census.gov), runs on Feb 1 of even years post-elections.

Output: GeoJSON/Shapefiles of districts, uploaded to a public portal (e.g., GitHub or state election site).

Verification and Transparency:

Publish RNG seed (e.g., hash of prior election results) for reproducibility.

Run 100+ simulations per state; select one randomly or by neutrality metric (e.g., minimal efficiency gap).

Open-source the code (e.g., on GitHub under MIT license) for audits.

5. Challenges and Mitigations

Population Equality: Use optimization (e.g., PuLP linear programming) to fine-tune after random aggregation.

Compactness/Contiguity: Post-process with smoothing (e.g., convex hull) or reject non-compact runs.

VRA Compliance: If required, add constraints (e.g., preserve majority-minority areas via seeded protection); otherwise, pure randomness may violate—hybrid with human review.

Compute: ~1-2 hours per large state on a modern CPU; parallelize across 50 states in <1 day.

Legality: This would require constitutional amendments or state laws; tech alone can't enforce.

This system produces verifiable, bias-free maps. Tools like Dave's Redistricting App or Districtr already prototype similar ideas—extend them nationally with the above randomization. For a proof-of-concept, start with one state in Jupyter Notebook.

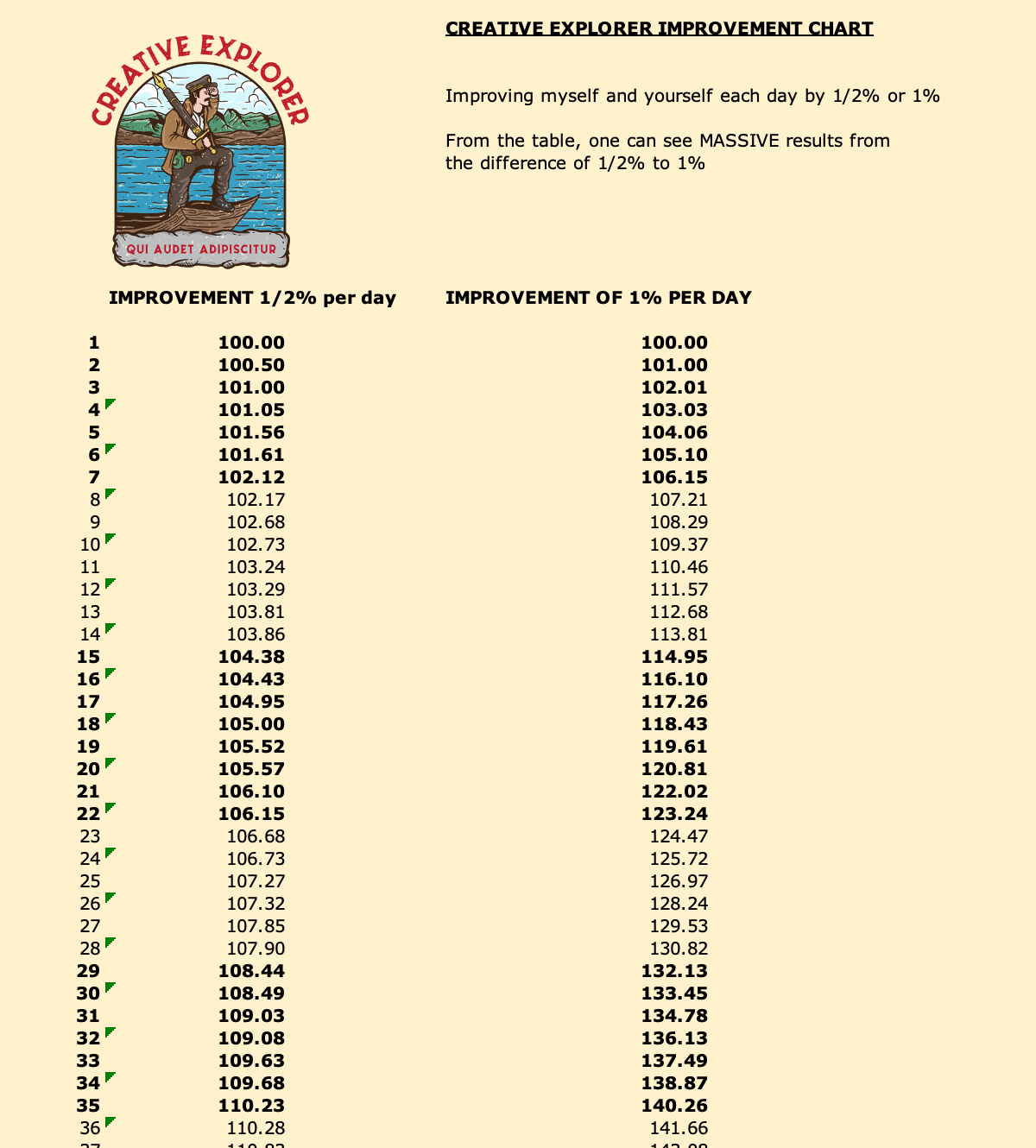

Did You Know...

... if you improve 1/2% each day, then you will be 267% better over one year? Who can compete with that?

Setting Yourself Up for Success usually doesn't mean one big jump but rather incremental change day after day. Think of it like a ocean wave, moving far out in the Pacific with almost an undetectable push...finally with the power and grace of an ocean wave onto the beach. Create your own metaphor to embrace small habits with a huge future impact.

Facebook

Instagram

X

LinkedIn

Youtube

Website